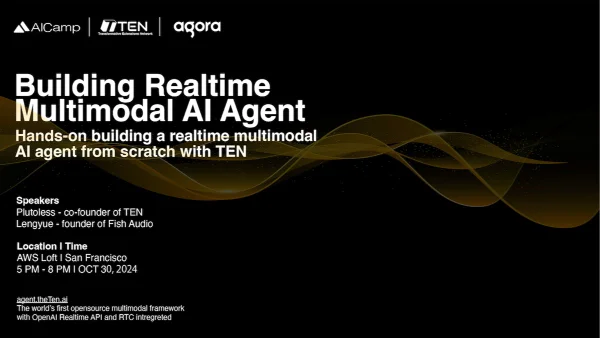

Building Realtime Multimodal AI Agents

**Important:** Registration on the event website is REQUIRED for admission.

Description:

Join AICamp and Agora to learn how to create AI agents that can think, listen, see, and interact like humans in real time!

In this hands-on workshop, you'll explore the TEN framework, experiment with AI services and extensions, and gain the flexibility to build agents for use cases like voice chatbots, AI-generated meeting minutes, language tutors, virtual companions, and more.

Why Attend?

- Learn about multimodal AI agents and the OpenAI Realtime API;

- Build your own AI agent from scratch in under an hour;

- Get 1-on-1 consulting and support from experts;

- Be inspired by live demos showcasing multimodal agents in various scenarios;

- Complete the challenges and win prizes;

- Earn free RTC minutes and contribute to the TEN Agent GitHub repository.

Agenda:

- 5:00~5:30pm: Check-in, food, and networking

- 5:30~5:50pm: Tech Talk: Multimodal AI agents with the OpenAI Realtime API

- 5:50~6:10pm: Tech Talk: Building a voice agent with the TEN Framework

- 6:10~8:00pm: Hands-on Workshops

- 8:00pm: Open discussion, mixer, and closing

Speakers/Instructors:

- Plutoless, co-founder of the TEN Framework and advocate for the RTE Developer Community

- Lengyue, founder of Fish Audio (Hanabi AI Inc.)

Prerequisite:

- Bring your laptop (minimum system requirements: CPU >= 2 cores; RAM >= 4 GB; Docker and Docker Compose installed; Node.js (LTS) v18.0.0)

Sponsors:

We are actively seeking sponsors to support the AI developer community, whether by offering venue space, providing food, or contributing cash. Sponsors will not only speak at the meetups and receive prominent recognition, but also gain exposure to our membership base of 35,000+ AI developers in the San Francisco Bay Area and 400K+ worldwide.

Community on Slack/Discord

- Event chat: chat and connect with speakers and attendees

- Sharing blogs, events, job openings, and project collaborations